From Keycloak to Cognito: Building a Self-Hosted Terraform Registry on AWS

- Oleksandr Kuzminskyi

- October 26, 2025

Introduction - Why Host a Private Terraform Registry

Every DevOps team depends on Terraform modules - they’re the building blocks of your infrastructure. And like any dependency, when the source disappears or becomes unreliable, the whole stack shakes.

For a long time, the public Terraform Registry was good enough.

It’s convenient, searchable, and integrates natively with the terraform CLI.

But convenience has limits when you start depending on it for production.

One practical reason to host my own registry was version control.

Only the Terraform Registry protocol - whether public or self-hosted - supports semantic version ranges, allowing me to pin modules to a major version line like ~> 1.0.

This gives teams freedom to upgrade minor versions automatically, while staying safe from breaking changes.

Other distribution methods (Git repositories, HTTPS servers, local paths) don’t support this capability at all.
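To make the difference concrete, here is a minimal sketch. The registry hostname, namespace, module names, and Git URL are hypothetical placeholders; only the version argument for registry sources and ?ref= pinning for Git sources are standard Terraform behavior.

```hcl
# Registry source: a version constraint is allowed.
module "vpc" {
  # Hypothetical private registry address: <host>/<namespace>/<name>/<provider>
  source  = "registry.example.com/acme/vpc/aws"
  version = "~> 1.0" # any 1.x release, never 2.0
}

# Git source: the version argument is not supported.
# The ref selects exactly one tag, branch, or commit.
module "vpc_from_git" {
  # Hypothetical repository URL
  source = "git::https://git.example.com/acme/terraform-aws-vpc.git?ref=v1.0.0"
}
```

With the registry source, terraform init -upgrade picks up new 1.x releases on its own; with the Git source, every upgrade means editing the ref.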

Even though, over time, I began locking modules to exact versions and using Renovate to upgrade pinned dependencies, I wanted to preserve that option for when it matters.

The second reason came from experience - and a painful one. Once, the public Terraform registry went down. Not entirely - it was partially available. I could pull existing versions, but I couldn’t publish a new one. The outage lasted four days, and their status page showed “all green” the entire time. Support didn’t acknowledge the issue until nearly a week later. That moment changed my perspective. If I ever needed to push an urgent fix or release, I’d have been blocked by someone else’s outage.

The conclusion was obvious: if your infrastructure depends on Terraform, you need control over your own registry. Self-hosting isn’t about vanity or paranoia - it’s business continuity. It’s about ensuring the most critical part of your delivery pipeline remains in your hands.

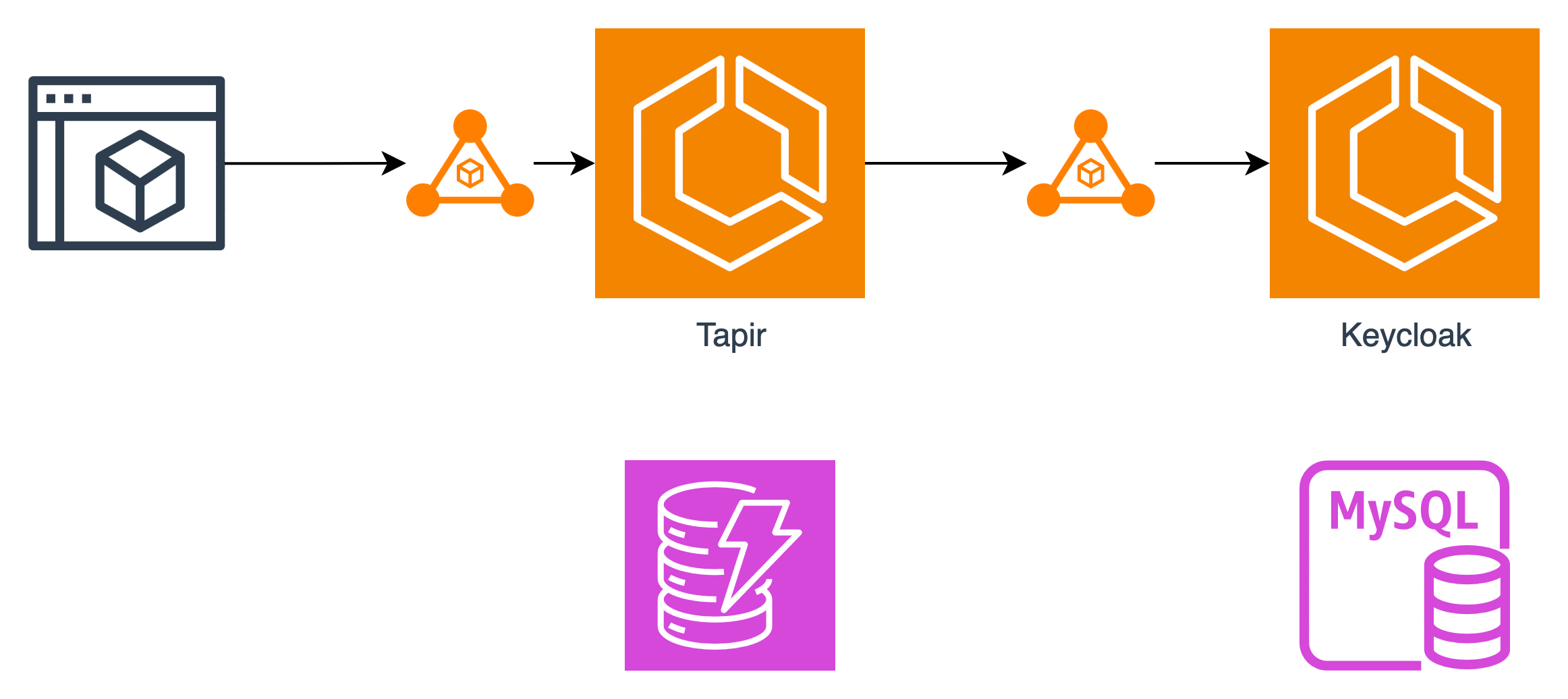

Phase 1 - Tapir + Keycloak: The First Version

When I began looking for a self-hosted Terraform Registry implementation, I didn’t start with a grand architectural plan - I simply followed what other engineers had already made work. A few community threads and blog posts mentioned Tapir as a solid open-source implementation of the Terraform Module Registry Protocol, so I gave it a try.

Tapir turned out to be easy to deploy and well-maintained, so I decided to use it as the core of my registry. In its documentation, Tapir explicitly says “Tapir integrates well with Keycloak.” That was enough of an endorsement for me. Keycloak looked mature, supported OpenID Connect out of the box, and was widely adopted - so I set it up as the identity provider.

The deployment worked. Clients could read modules from the registry, and CI/CD pipelines could publish new versions. It was a good learning experience.

Tapir itself was relatively lightweight - it stores module metadata in DynamoDB and artifacts in S3, both fully managed AWS services. Keycloak, on the other hand, was the heavy part of the stack. It required a dedicated MySQL RDS instance to persist its state and an Application Load Balancer to handle HTTPS for the ECS service.

Problem #1 - Complexity & Cost

For what was essentially a single-user authentication flow, I was suddenly maintaining a full microservice stack - and paying about $35–40 per month for the privilege.

The stack included an ECS service running Tapir and Keycloak, an RDS MySQL instance, and an Application Load Balancer. For a simple authentication flow, the operational complexity and monthly cost were higher than necessary. This prompted me to look for a more lightweight, AWS-native alternative.

Problem #2 - Load Balancer Stickiness & Reliability

Once Tapir and Keycloak were running, authentication worked - but only when everything lined up perfectly.

The issue was subtle: Tapir depends on maintaining a consistent session with the same identity provider (IdP) instance. If a user’s authentication flow starts on one Keycloak container but the next request lands on another, the session breaks. The result is a failed login.

In theory, AWS Application Load Balancer (ALB) can handle this through stickiness, ensuring all requests from a single client go to the same target for a set duration. In practice, it didn’t work reliably with Keycloak behind ECS. Either the session cookie wasn’t respected, or the connection jumped between containers during scaling events - especially during deployments or when ECS recycled tasks.

That meant Tapir would occasionally send an OAuth callback to a different Keycloak instance than the one where the session began. And because Keycloak stores session state locally (not in the database), the second instance had no idea what was going on. Authentication simply failed.

After several frustrating rounds of debugging and tuning ALB stickiness attributes, I ended up taking the simplest possible approach: run one Tapir container and one Keycloak container. No scaling. No load balancing across multiple targets.

It was the only configuration that worked reliably - but it wasn’t highly available. Every ECS or AMI update, or even a rolling deployment, meant a few minutes of downtime. And if the EC2 host went down, the entire registry went with it.

I could live with the single-instance setup for a while, but it was clear that this architecture had reached its limits.

Decision Point - Keycloak Must Go

At some point, I realized I was spending more time maintaining authentication than using it. Running Keycloak in AWS felt like keeping a second platform alive just to issue tokens.

Keycloak is powerful - but it’s also heavy. It requires a database, a load balancer, IAM roles, patching, and a small budget line of its own. For a service authenticating just one or two engineers, it was far too much infrastructure.

So I started exploring alternatives.

I looked into Authentik, Zitadel, FusionAuth, and AWS Cognito - all modern OIDC providers, each with its own trade-offs between simplicity and flexibility.

My selection criteria were simple and pragmatic:

- ✅ OIDC must actually work with Tapir - not every provider does. Even fully spec-compliant IdPs can fail in subtle ways: claim naming, redirect URI handling, or token formatting. The devil is always in the details.

- 💵 Reasonable cost - preferably close to zero for a few active users.

- 🧩 Easy to deploy with Terraform - since the rest of the Tapir stack was already managed that way, authentication should be too.

Cognito checked all three boxes.

It’s AWS-managed, supports OpenID Connect out of the box, integrates easily with ECS and ALB, and costs nothing at small scale. Most importantly, there’s no database to manage, no load balancer for the IdP itself, and no servers to patch.

From that point, the choice was clear - Keycloak had to go. The new goal was to make Tapir work with Cognito - and package the entire setup as a single Terraform module.

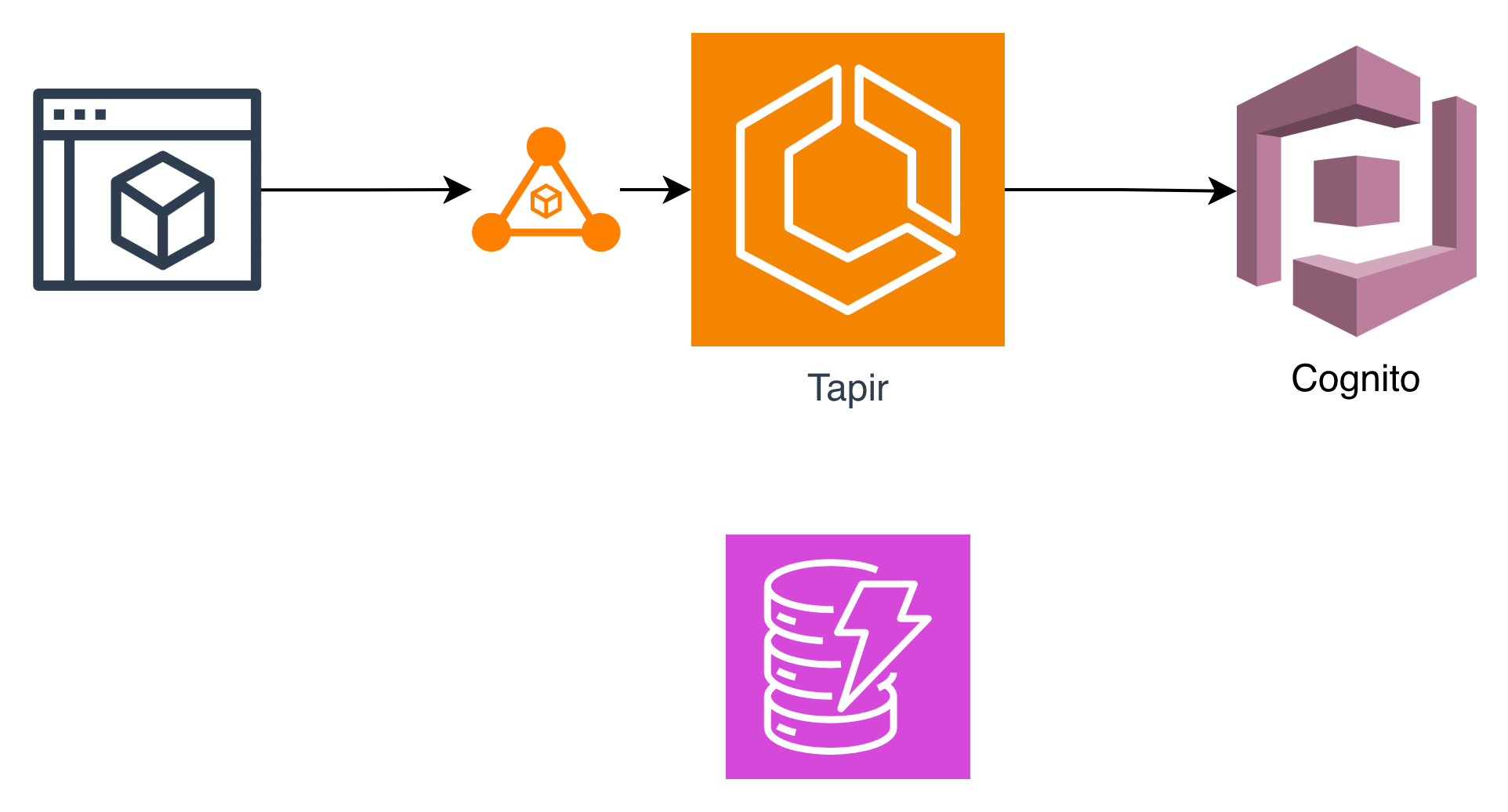

Phase 2 - Rebuilding with Cognito

After retiring Keycloak, I wanted a clean start - something reproducible, simple to maintain, and fully automated through Terraform. The goal was to build a single Terraform module that could deploy everything needed for a private Terraform registry: Tapir, Cognito, and all the supporting AWS components.

For the deployment, I used the InfraHouse ECS module - the same ECS module that InfraHouse uses internally to run production workloads. That choice immediately solved several problems: it provisions an ECS service, an Application Load Balancer, HTTPS termination, and an ACM certificate - all out of the box. That freed me to focus entirely on Tapir and Cognito integration rather than reimplementing infrastructure plumbing for the hundredth time.

The resulting architecture was clean and minimal:

- 🐳 ECS service running Tapir on EC2-backed instances.

- 🔒 Application Load Balancer handling HTTPS with ACM.

- 👤 AWS Cognito providing authentication: a User Pool, App Client, and Hosted UI Domain.

All of it defined in Terraform, so the entire stack could be rebuilt or destroyed in minutes.
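The Cognito part of that list can be sketched with three resources. This is an illustration under assumptions, not the module's actual code: the resource names, the domain prefix, and the chosen scopes are hypothetical.

```hcl
# User Pool: the user directory. Only administrators can create users.
resource "aws_cognito_user_pool" "registry" {
  name = "terraform-registry" # hypothetical name

  admin_create_user_config {
    allow_admin_create_user_only = true
  }
}

# App Client: the OIDC client that Tapir authenticates against.
resource "aws_cognito_user_pool_client" "tapir" {
  name         = "tapir"
  user_pool_id = aws_cognito_user_pool.registry.id

  generate_secret                      = true
  allowed_oauth_flows_user_pool_client = true
  allowed_oauth_flows                  = ["code"] # authorization code flow
  allowed_oauth_scopes                 = ["openid", "email", "profile"]
  supported_identity_providers         = ["COGNITO"]

  # Hypothetical registry URL
  callback_urls = ["https://registry.example.com"]
}

# Hosted UI Domain: serves the login page.
resource "aws_cognito_user_pool_domain" "registry" {
  domain       = "registry-example-auth" # hypothetical prefix
  user_pool_id = aws_cognito_user_pool.registry.id
}
```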

The first deployment, naturally, didn’t go perfectly. I ran into three issues almost immediately:

Redirect mismatches

Tapir sent HTTP callback URLs and Cognito refused them, throwing

InvalidParameterException: cannot use the HTTP protocol.

The fix came from Quarkus itself:

QUARKUS_OIDC_AUTHENTICATION_FORCE_REDIRECT_HTTPS_SCHEME=true

With that flag, Tapir started issuing HTTPS redirects automatically, even behind an internal ALB.

Another subtle issue came from a trailing slash mismatch between the callback URL configured in Cognito and the redirect URI Tapir sent during authentication. Cognito treats https://registry.example.com and https://registry.example.com/ as distinct URLs, so even a single slash at the end caused confusing Auth Flow Not Enabled for This Client errors.

The fix was simple but non-obvious - register both variants as callback URLs:

callback_urls = [
  local.registry_url,
  "${local.registry_url}/",
]

Lesson: treat trailing slashes in OAuth2 redirect URIs as distinct; register both.

Once both were listed, Cognito accepted redirects consistently, regardless of how Tapir or the browser formatted the URL.

Health checks

Tapir doesn’t yet expose the /q/health/ready or /q/health/live endpoints, so ALB health checks, like any other unauthenticated request, were redirected to Cognito.

The workaround: configure the target group to treat HTTP 302 as a healthy response.

Not elegant, but stable enough until Tapir implements SmallRye Health.
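On a plain target group the workaround might look like the sketch below. The port, the health check path, and the variable name are assumptions, and accepting 200 alongside 302 is an addition for the day a real health endpoint exists. The InfraHouse ECS module may expose this setting under a different input.

```hcl
variable "vpc_id" {
  type = string
}

resource "aws_lb_target_group" "tapir" {
  name     = "tapir" # hypothetical name
  port     = 8080    # assumed container port
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  health_check {
    path = "/"
    # Treat the redirect to the Cognito login page as healthy.
    matcher = "200,302"
  }
}
```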

Roles and Admin Permissions

Once authentication worked, authorization was the next hurdle. Tapir’s management interface isn’t open to all authenticated users - it expects a specific claim in the token:

"role" : "admin"

Unfortunately, AWS Cognito doesn’t issue this claim by default.

Instead, it places users’ group memberships under:

"cognito:groups" : ["admin"]

My first fix was the “AWS-standard” approach: a pre-token-generation Lambda trigger, configured through the lambda_config block of the aws_cognito_user_pool resource.

The Lambda intercepted tokens and injected the role claim before they reached Tapir. It worked, but it was fragile - another moving part to deploy, secure, and maintain.
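For reference, wiring such a trigger takes roughly the following. This is a sketch with hypothetical names; the Lambda function itself is assumed to exist already and is passed in by ARN.

```hcl
variable "claims_lambda_arn" {
  type        = string
  description = "ARN of an existing Lambda that adds the role claim (hypothetical)"
}

resource "aws_cognito_user_pool" "registry" {
  name = "terraform-registry" # hypothetical name

  lambda_config {
    # Invoked before Cognito issues tokens.
    pre_token_generation = var.claims_lambda_arn
  }
}

# Cognito needs explicit permission to invoke the function.
resource "aws_lambda_permission" "cognito" {
  statement_id  = "AllowCognitoInvoke"
  action        = "lambda:InvokeFunction"
  function_name = var.claims_lambda_arn
  principal     = "cognito-idp.amazonaws.com"
  source_arn    = aws_cognito_user_pool.registry.arn
}
```

Together with the function code, its IAM role, and its packaging, that is a fair amount of infrastructure for one extra claim.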

Eventually, I realized the problem could be solved cleanly at the application level. Quarkus (the Java framework Tapir uses) provides a configuration property that tells it where to find roles in an incoming token:

QUARKUS_OIDC_ROLES_ROLE_CLAIM_PATH=cognito:groups

With that single line, Tapir began mapping Cognito’s cognito:groups directly to roles - no Lambda, no triggers, no extra infrastructure.

The configuration is cleaner, faster to deploy, and much easier to reason about.
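Both Quarkus settings mentioned so far are ordinary container environment variables. A minimal sketch of passing them through a raw ECS task definition follows. The image variable, memory size, and port are assumptions, and the InfraHouse ECS module takes environment variables through its own inputs rather than this resource.

```hcl
variable "tapir_image" {
  type        = string
  description = "Tapir container image reference (hypothetical variable)"
}

resource "aws_ecs_task_definition" "tapir" {
  family = "tapir" # hypothetical family name

  container_definitions = jsonencode([
    {
      name         = "tapir"
      image        = var.tapir_image
      essential    = true
      memory       = 512                      # assumed size
      portMappings = [{ containerPort = 8080 }] # assumed port

      environment = [
        # Build HTTPS redirect URIs even though the container sees plain HTTP.
        { name = "QUARKUS_OIDC_AUTHENTICATION_FORCE_REDIRECT_HTTPS_SCHEME", value = "true" },
        # Read roles from Cognito's group claim.
        { name = "QUARKUS_OIDC_ROLES_ROLE_CLAIM_PATH", value = "cognito:groups" },
      ]
    }
  ])
}
```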

Debugging JWT tokens and diving into Quarkus OIDC docs turned out to be surprisingly educational. A quick Bash script and jwt.io made token debugging easier:

POOL_ID="us-west-2_QE7X4GKfI"
CLIENT_ID="7v4senofi1m0vom9flplv1ruu4"
CLIENT_SECRET="very-secret-secret-issued-by-cognito"
USERNAME="aleks@infrahouse.com"
PASSWORD='my-password-in-cognito'

# Required for password-based CLI testing with admin-initiate-auth:
# explicit_auth_flows = ["ALLOW_ADMIN_USER_PASSWORD_AUTH", "ALLOW_REFRESH_TOKEN_AUTH"]

# SECRET_HASH = Base64(HMAC-SHA256(key=client secret, message=username + client id))
SECRET_HASH=$(printf '%s' "${USERNAME}${CLIENT_ID}" \
  | openssl dgst -sha256 -hmac "${CLIENT_SECRET}" -binary \
  | openssl base64)

# Print only the ID token from the authentication result.
aws cognito-idp admin-initiate-auth \
  --user-pool-id "$POOL_ID" \
  --client-id "$CLIENT_ID" \
  --auth-flow ADMIN_USER_PASSWORD_AUTH \
  --auth-parameters "USERNAME=${USERNAME},PASSWORD=${PASSWORD},SECRET_HASH=${SECRET_HASH}" \
  --query 'AuthenticationResult.IdToken' --output text

By decoding the resulting token in jwt.io, I could confirm that the expected claims - "cognito:groups": ["admin"] and, while the Lambda trigger was still in place, "role": "admin" - were present and readable by Tapir. This made troubleshooting transparent and reproducible, without guesswork or trial-and-error.

Once these issues were ironed out, everything clicked into place. Cognito handled authentication seamlessly, Tapir recognized users correctly, and the entire setup could be deployed in one Terraform apply - reproducible, minimal, and lean.

Testing the Module with Pytest

I added a pytest-based test that brings the module up from scratch using Terraform fixtures.

The test currently validates against AWS provider v5 and v6, so I catch regressions across both lines.

For now, the assertion is intentionally simple - a successful terraform apply is considered a pass - but this harness gives me room to grow: I can later script a full end-to-end check (publish a sample module, fetch it via the registry API, validate auth flows, etc.).

This test bed also sets the stage for Tapir upgrades (I’m on 0.7 today; 0.9 is available), letting me verify that critical registry functionality still works before I roll forward.

Outcome - A Lightweight, Fully Managed Registry

The final result is a fully self-contained Terraform module that deploys a private Terraform registry powered by Tapir and AWS-native services - no manual steps, no third-party dependencies, and no database server to run (Tapir keeps its state in DynamoDB and S3).

Everything - ECS service, Application Load Balancer, ACM certificates, Cognito user pool, and DNS records - is created automatically through Terraform. Users are managed entirely by AWS Cognito, which sends temporary passwords via email and enforces a password change on first login.
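User management in Terraform could look like the following sketch. The variable, the email address, and the resource names are hypothetical, and the module may expose users through its own inputs instead. Because no temporary password is set, Cognito generates one and delivers it by email.

```hcl
variable "user_pool_id" {
  type = string
}

# Group whose name ends up in the cognito:groups claim.
resource "aws_cognito_user_group" "admin" {
  name         = "admin"
  user_pool_id = var.user_pool_id
}

resource "aws_cognito_user" "engineer" {
  user_pool_id = var.user_pool_id
  username     = "engineer@example.com" # hypothetical user

  attributes = {
    email          = "engineer@example.com"
    email_verified = "true"
  }

  # Send the invitation with the temporary password by email.
  desired_delivery_mediums = ["EMAIL"]
}

resource "aws_cognito_user_in_group" "engineer_admin" {
  user_pool_id = var.user_pool_id
  group_name   = aws_cognito_user_group.admin.name
  username     = aws_cognito_user.engineer.username
}
```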

Unlike the earlier Keycloak setup, this version has no RDS instance and no identity-provider containers to maintain. It’s lean, reliable, and built entirely on AWS infrastructure primitives.

Despite the simplicity, it’s production-ready - with HTTPS termination, IAM integration, and an isolated user directory. And best of all, it’s 100% Terraform-managed: everything is declarative; no AWS Console clicks are required.

Lessons Learned

Building this module wasn’t just about wiring AWS resources together - it was about understanding how every moving piece in an authentication flow fits.

AWS Cognito OIDC can replace a full identity stack. It’s surprisingly capable once configured correctly. You get user management, email verification, password policies, and OIDC compliance without managing any servers or databases.

Quarkus configuration variables (QUARKUS_*) are powerful once you understand their structure. Once I realized that QUARKUS_OIDC_ROLES_ROLE_CLAIM_PATH maps to quarkus.oidc.roles.role-claim-path, everything about fine-tuning Tapir’s authentication behavior suddenly made sense.

Manually debugging tokens teaches you the real mechanics of OIDC. Using a short Bash script and jwt.io to decode ID tokens revealed exactly what Cognito was returning - and what Tapir was expecting.

Removing code is the best optimization. The project started with a custom Lambda trigger to rewrite claims, but ended with a single environment variable. Fewer moving parts, faster deployments, easier maintenance - and a cleaner design overall.

Conclusion - Pragmatic Simplicity

Hosting your own Terraform registry isn’t about vanity or redundancy - it’s about control. When the public registry becomes unreliable or slow, having your own gives you confidence and autonomy over a critical part of your infrastructure lifecycle.

The final setup with Tapir + AWS Cognito strikes a balance between simplicity and capability. No heavy databases, no Keycloak maintenance, and no custom authentication code - just Terraform resources, AWS primitives, and clear configuration.

The result is a clean, maintainable, and fully automated Terraform module that deploys a private registry end-to-end. It’s easy to reproduce, easy to extend, and easy to trust.

Looking ahead, I hope to see:

- Native health check endpoints in Tapir (SmallRye Health integration would be ideal).

- An end-to-end test that publishes a sample Terraform module and consumes it through the registry.

- An upgrade to Tapir 0.9.

In the end, this project reaffirmed a simple truth: simplicity takes work.

If you’re considering running your own Terraform registry, check out: 👉 infrahouse/terraform-aws-registry on GitHub or deploy it directly from the Terraform Registry. Contributions, feedback, and ideas for new integrations are always welcome.